Stop using LLMs for generating content

Much of the fear surrounding the growth of AI is the potential (and perceived) threat of machines replacing humans. This is intuitively not an unreasonable fear to hold (despite there being little in the way of evidence to support it¹).

In August 2024, around the time of the Olympics, Google were forced to pull an advert for Gemini that depicted a child writing a fan letter to their sports hero. The public backlash was brutal. “This is not what AI is for” was the general sentiment.

They defended it by saying:

“We believe that AI can be a great tool for enhancing human creativity, but can never replace it,” the statement said. “Our goal was to create an authentic story celebrating Team USA.”

Yet their ongoing marketing around Gemini doesn’t support this statement.

As Ted Chiang wrote in The New Yorker in August:

We are entering an era where someone might use a large language model to generate a document out of a bulleted list, and send it to a person who will use a large language model to condense that document into a bulleted list. Can anyone seriously argue that this is an improvement?

This is exactly the scenario (amongst others) that Google are pushing in their marketing.

In a recent sponsored webinar with The Register, they extolled the benefits of Gemini in Google Workspace. One of the case studies presented showed a business worker using Gemini to draft an email, which the recipient then summarised using Gemini.

Human effort minimised. Human input also minimised.

Isn’t this the sort of job security threat we should be fearing?

Should we or shouldn’t we

Not necessarily. There is some important nuance to the threat, though.

When examining these uses of AI, it’s worth analysing which component of the interaction we are instructing the machines to actually replace.

This distinction is the real existential crisis that everyone should be worried about.

Asking an AI to write a fan letter is a clear case of something that you (as a human) inherently know you shouldn’t be asking an AI to do. If the point of a fan letter is a heartfelt message of support or adoration, then asking a machine to do this for you is disingenuous.

In the Gemini Workspace example, by contrast, the email exchange most likely existed to establish, ‘for the record’, whatever audit trail was needed to show what was happening and how a business decision had been reached. But if, as the example depicts, we can mostly outsource those interactions to machines, that suggests there was no point or value to the interaction in the first place. The performative dance of the emails could be removed entirely and the machines allowed to operate autonomously.

And these are exactly the sorts of task that machines should be used for. If there are inefficient business processes that ‘just need to happen’ then machines are fundamentally better and more suited to doing them. Humans are slow, clumsy and quite annoying.

In this case, we have outsourced the process or action to the machine but, crucially, not the important bit: the thinking, decision-making and considerations that led to it. Not only are machines incapable of this sort of work but, more importantly, we should not even consider entrusting it to them.

There are some things we categorically shouldn’t outsource to the machines: screening job applications is one.

If you can’t be bothered to write it, I can’t be bothered to read it

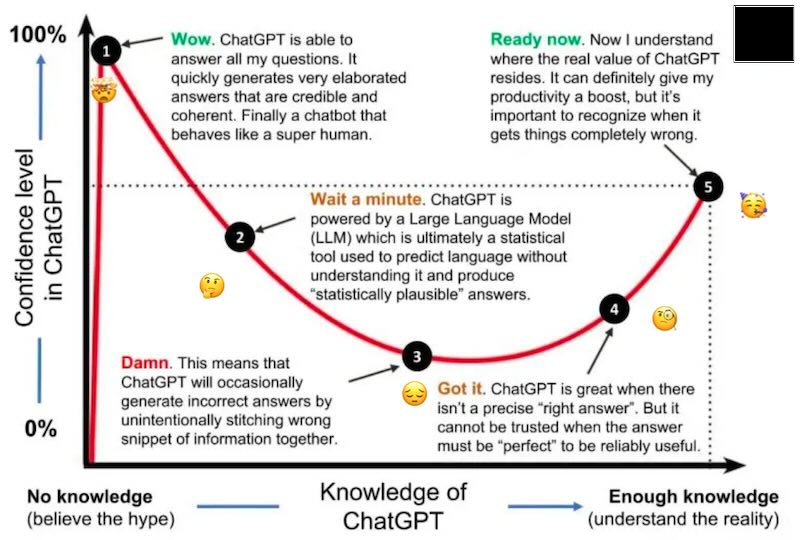

In my recent talk to the AGC, I estimated where we sat on the AI/LLM hype cycle and urged caution around the use of AI for generating content:

In 2024, we are now much closer to the bottom of that trough. People can spot AI text and images a mile off and have become tired (or worse) of it.

If you’re using LLMs to create text for other people, ensure that you (a) refine your prompts to match your desired style as much as possible and (b) avoid posting verbatim without providing your own effort.

I am so bored of AI-generated text and images on social media now. It’s easy to spot and tells me one thing: you couldn’t spend the time to formulate your own thoughts.

Or in other words: if you can’t be bothered to write it, then I can’t be bothered to read it. (Or even get an AI to summarise it for me.)

Humanity is ours

I think it’s perfectly reasonable to be fearful of the threat that machines pose to the modern world. It’s easy to visualise some disastrous situations. But they will only materialise if we allow them to.

Whilst we retain a strong grip on our humanity, the machines won’t be able to take it away from us. Anyone who gives it away freely to the machines will have no comeback.

-

¹ The currently popular way to reframe this fear is: “Don’t fear the AI; fear the human who knows how to use the AI.”

Posts in this Series

- Stop using LLMs for generating content

- AGC Conference 2024 - 12 months in AI

- Why we removed all LLM-generated content from cortex.gg

- Building a website with AI - the state of ChatGPT

- Coding with an AI Sidekick - How ChatGPT Facilitated the Creation of DipnDine.gg

- Can AI ever be sentient? On LaMDA and the wider debate