AGC Conference 2024 - 12 months in AI

The Association of Guernsey Charities holds an annual conference for its members, where they meet and discuss all sorts of topics within the charitable sector in Guernsey. It includes a series of talks, workshops and focus groups.

I was asked to present in 2023 on the topic of AI, specifically how charities can start using AI to help them. I elected to broaden the talk to cover the effective use of SaaS as well as AI. They asked me back in 2024 to give an update.

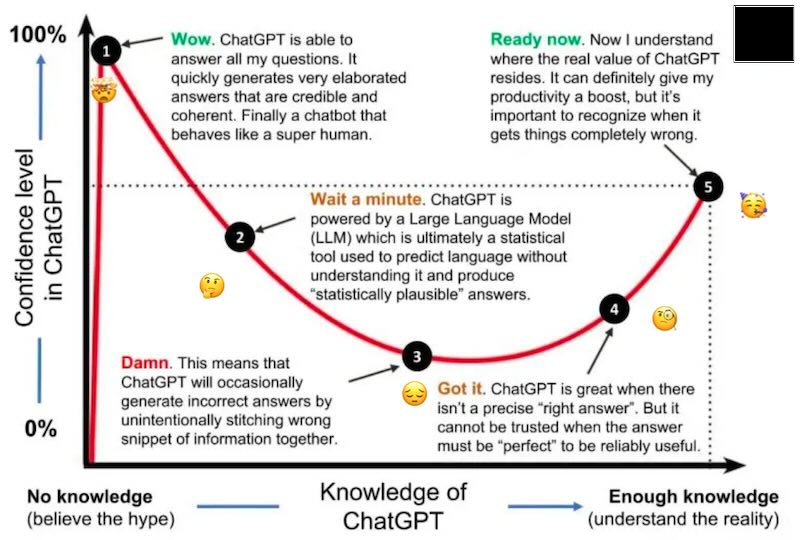

Pitching AI to a room full of non-technical people is an interesting proposition. For the most part, they only really want to know what it can do and to see the opportunities for using it within their organisation, but it would be remiss of someone in my position not to present the full picture, warts and all.

After spending some time on the good-news stories of AI, and on the many, many bad-news stories (including the various ways AI is destroying the planet), we decided to focus on large language models (LLMs) for 2024.

Major advances in LLMs in the 12 months to October 2024

- The context window

‘Context window’ is the term for the size of an LLM’s ‘memory’ within a conversation; i.e., how much of the interaction (measured in tokens) it can take into account at once, and therefore how far you can keep refining your prompts as you go. There is now greater scope to start with a very broad question and then iteratively drill into it through the conversation to focus on key areas. Context windows everywhere are getting bigger, resulting in a richer experience.
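To make the idea a little more concrete, here is a rough Python sketch of a context window as a fixed token budget, where the oldest messages fall out of ‘memory’ first once the budget is used up. This is an illustration under stated assumptions, not how any particular LLM works: the function names are hypothetical, tokens are crudely approximated as whitespace-separated words (real models use subword tokenizers), and each provider handles overflow in its own way.

```python
# Hypothetical sketch: a context window modelled as a fixed token budget.
# Assumptions (not from any real LLM): one token per whitespace-separated word,
# and the oldest messages are dropped first when the budget is exceeded.

def count_tokens(text: str) -> int:
    """Crude token estimate: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(messages: list[str], window: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits in `window`."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > window:
            break  # this message and everything older falls out of "memory"
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    "How can a small charity use AI?",     # 7 "tokens"
    "Focus on fundraising ideas please.",  # 5 "tokens"
    "Now just grant applications.",        # 4 "tokens"
]

# Small window: the opening question is forgotten.
print(fit_to_window(conversation, window=10))
# → ['Focus on fundraising ideas please.', 'Now just grant applications.']

# Large window: the whole conversation is still in view.
print(fit_to_window(conversation, window=100))
```

With the small window, the broad opening question has already dropped out by the time you are drilling into grant applications; a bigger window keeps it in view, which is one reason longer, iterative conversations now hold together better.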

- Image and video generation capabilities

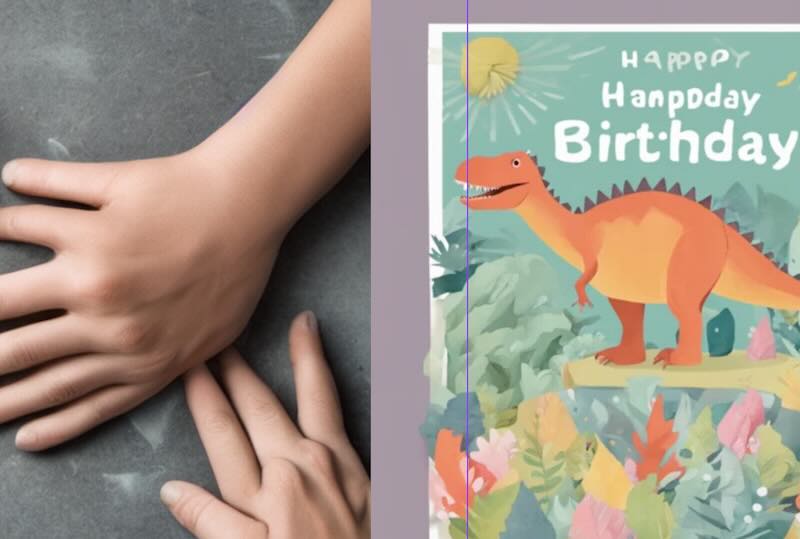

Advances have been made in image generation, and many of the early issues we experienced with, e.g., DALL-E, such as its trouble with fingers and rendering words, have been resolved (or at least improved).

This came with a warning over the growth of image and video fakery!

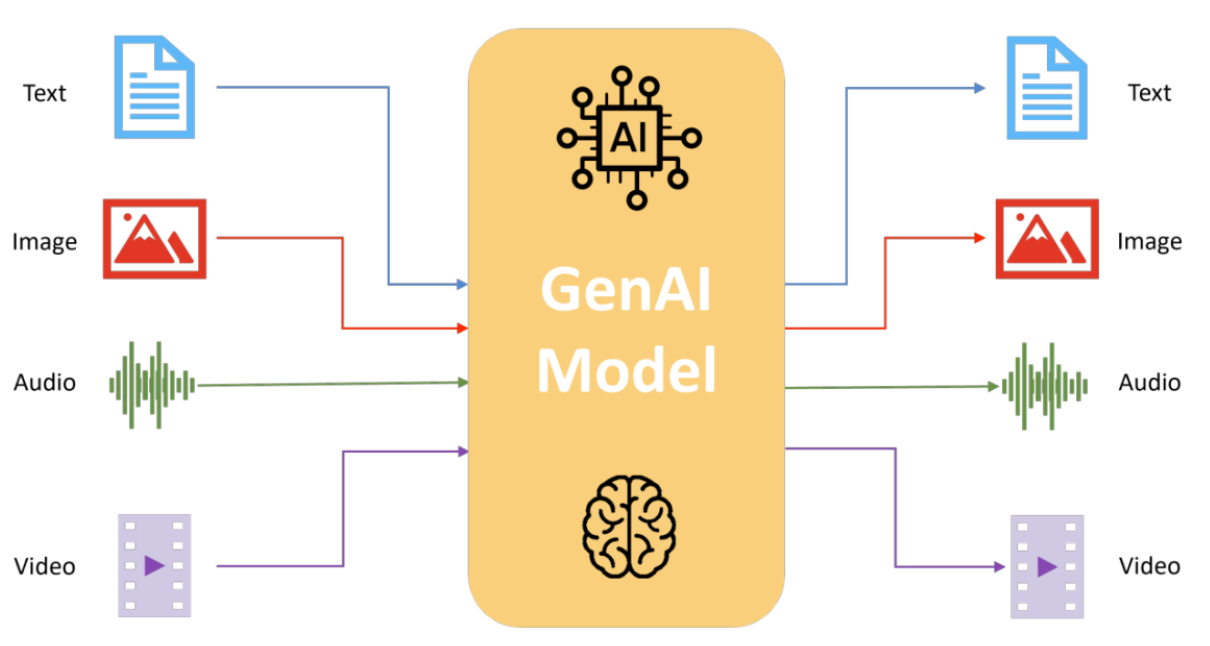

- Multimodal GenAI

Perhaps the most intriguing development is the growth of non-text-based AI interactions, so-called multimodal AI: in other words, the ability of an LLM to ingest text, image, audio and video formats, and then spit out text, image, audio and video.

To demonstrate this, we used VidNoz to do a live presentation of an animated Stephen Fry…

(although our test video of the Hoff was arguably better):

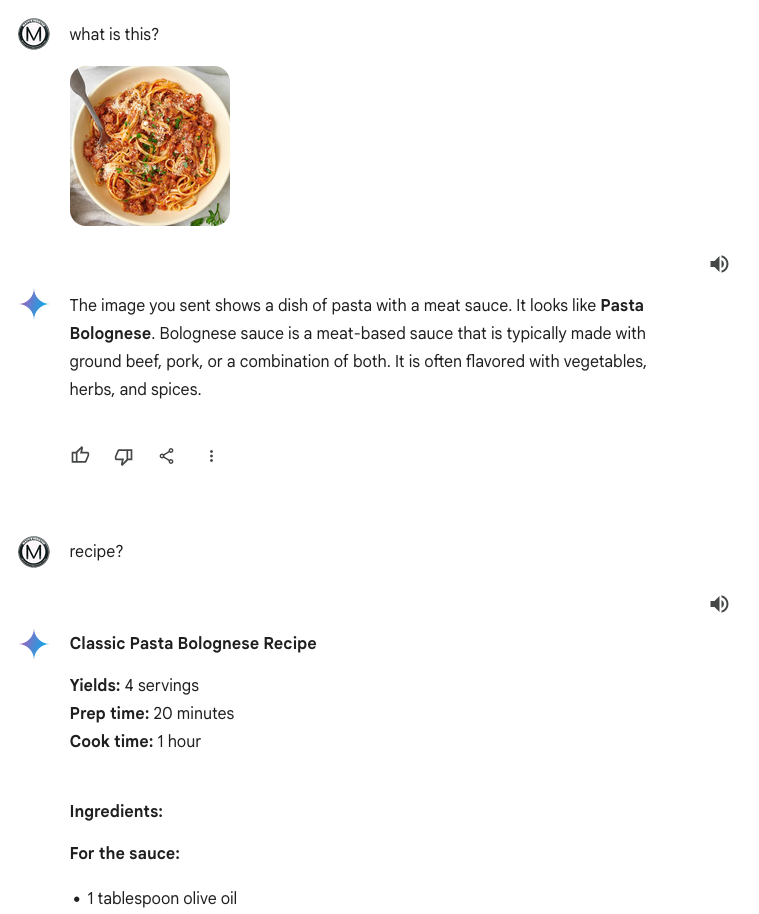

as well as asking Gemini to identify an audience member’s dinner:

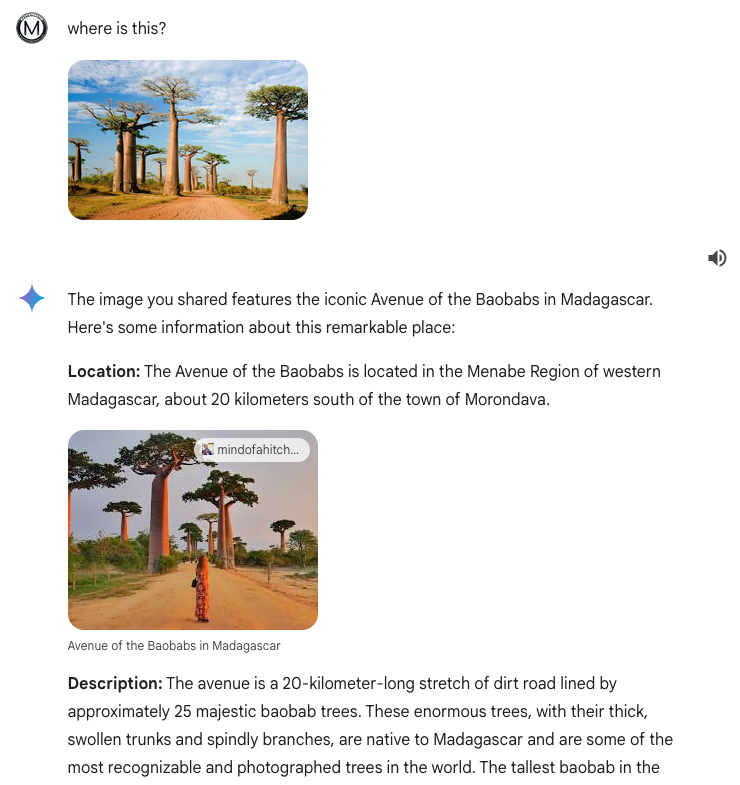

and another’s holiday destination:

Pretty neat!

The Warnings

Inevitably, there had to be a sanity check. LLMs can do some interesting things, but users need to exercise caution.

- Be sceptical; fact-check any claims

It is well known that LLMs hallucinate; that is to say, they lie. They make stuff up. It is generally preferable to use LLMs for limited-scope activities where the output can be verified (even that is not foolproof); try to avoid situations where veracity cannot be established. Don’t be the lawyer who used ChatGPT to prepare his case and got found out!

- Be aware of the hype

A year ago, we were probably somewhere between 1 and 2 on the hype chart, where the output LLMs were creating still seemed unbelievable. In 2024, we are much closer to the bottom of that trough. People can spot AI text and images a mile off and have grown tired of them (or worse):

> Editors know that audiences want to read words (like these) written by a person. While suitable for a summary, the bland, “mid” content generated by an AI lacks a human touch. It’ll do in a pinch, but leaves no one particularly satisfied.

If you’re using LLMs to create text for other people, make sure you (a) refine your prompts to match your desired style as closely as possible and (b) don’t post the output verbatim without adding your own effort.

- LLMs have no moral compass

Only you can judge whether your use of an LLM is ethical for what you’re doing. LLMs are biased, misogynistic and racist machines that are easily subverted, and their use for anything that directly affects people should be questioned. My own view is that using them to, e.g., screen job applicants is not right.

- Beware of copyright and licensing issues

LLMs are trained on vast quantities of data from myriad sources, the vast majority of it unlicensed. There are countless lawsuits currently open against the companies behind the major LLMs, alleging copyright, IP and license infringement in the way the models have been trained. A good primer is here:

> Are there copyright considerations I need to think about when using genAI tools?
>
> Yes. The legal status of AI tools is unsettled in Canada at this point in time. This is an evolving area and our understanding will develop as new policies, regulations, and case law becomes settled.
>
> Input: The legality of the content used to train AI models is unknown in some cases. There are a number of lawsuits originating from the US that allege genAI tools infringe copyright and it remains unclear if and how the fair use doctrine can be applied. Canada remains in a similar uncertain state; it is unclear the extent to which existing exceptions in the copyright framework, such as fair dealing, apply to this activity.
>
> Output: Authorship and ownership of works created by AI is unclear. Traditionally, Canadian law has indicated that an author must be a natural person (human) who exercises skill and judgement in the creation of a work. As there are likely to be varying degrees of human input in content generated, it is unclear in Canada how it will be determined who the appropriate author and owner of works are. More recently, the US Copyright Office published the following guide addressing these issues: Copyright Registration Guidance for Works Containing AI-Generated Materials

So be aware of the output you’re using and whether it could lead to issues for you and your organisation.

And that mostly wrapped it up. You can grab an abridged version of the slides here.

Posts in this Series

- Stop using LLMs for generating content

- AGC Conference 2024 - 12 months in AI

- Why we removed all LLM-generated content from cortex.gg

- Building a website with AI - the state of ChatGPT

- Coding with an AI Sidekick - How ChatGPT Facilitated the Creation of DipnDine.gg

- Can AI ever be sentient? On LaMDA and the wider debate